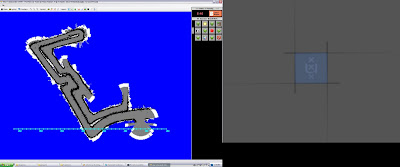

1st pic: Omnicam WSM, no rejection filter. The route runs from the middle of the maze to the exit.

2nd pic: Official ground-truth dead-reckoning laser map of the same route

3rd pic: Omnicam QWSM, rejection filter set to 1.25 meters

4th pic: Laser QWSM, same route as the 3rd pic

I've created the outlier rejection filter and set it to 1.25 meters.

The rejection filter works as follows:

- Compute averagedistance as the mean of all omnicamranges

- Set Maxboundary to averagedistance + Rejectiondistance and Minboundary to averagedistance - Rejectiondistance (Rejectiondistance = 1.25 m)

- Go through the omnicamranges: every distance above Maxboundary gets its value set to MaxRange, and every distance below Minboundary gets its value set to MinRange.
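
The steps above can be sketched roughly like this in Python. This is only an illustration, not the actual code: the function name `reject_outliers` and the default values for `min_range` and `max_range` are made up for the example, and I'm assuming MinRange and MaxRange are fixed sensor limits that get passed in.

```python
from typing import List


def reject_outliers(
    ranges: List[float],
    rejection_distance: float = 1.25,  # meters, the value used in this post
    min_range: float = 0.0,            # placeholder value, assumption
    max_range: float = 10.0,           # placeholder value, assumption
) -> List[float]:
    """Replace ranges far from the average with min_range / max_range."""
    if not ranges:
        return []

    # Step 1: average distance over all omnicam ranges
    average_distance = sum(ranges) / len(ranges)

    # Step 2: boundaries at average +/- rejection distance
    max_boundary = average_distance + rejection_distance
    min_boundary = average_distance - rejection_distance

    # Step 3: overwrite every range that falls outside the boundaries
    filtered = []
    for r in ranges:
        if r > max_boundary:
            filtered.append(max_range)
        elif r < min_boundary:
            filtered.append(min_range)
        else:
            filtered.append(r)
    return filtered


if __name__ == "__main__":
    scan = [3.0, 3.0, 3.0, 3.0, 0.5, 5.5]
    print(reject_outliers(scan))
```

With this example scan the average is 3.0 m, so the boundaries are 1.75 m and 4.25 m; the 0.5 m reading is replaced by `min_range` and the 5.5 m reading by `max_range`, while the other four are left alone.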

The rejection filter is meant to make the scan-matching algorithms work better, because they are sensitive to outliers. I've tried WSM + rejection filter, but that didn't seem to work. QWSM + rejection filter does work, as you can see in the 3rd pic.