The problem with the formula is that it works well at close range but poorly at longer ranges. Yesterday Arnoud gave me a better image server that can deliver images with resolutions of up to 1600x1200 pixels.

This has its advantages: an off-by-one-pixel error, which can certainly be expected, does much less damage to the resulting distance. For example, the distance error can be reduced to about 10 cm, instead of 40-50 cm on a low-resolution image.
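To see why a higher resolution helps, here is a minimal sketch that estimates the distance error caused by measuring the image radius one pixel too far out, at several image heights. The mirror model, the camera height of 0.4 m and the assumption that the mirror image just fills the image height are all hypothetical; the real distance formula would replace `distance_from_radius`.

```python
import math

# Hypothetical omnicam model, for illustration only (NOT the formula used in
# this project): a floor point at horizontal distance d is seen at an angle
# alpha below the horizon with tan(alpha) = CAMERA_HEIGHT / d, and alpha is
# assumed to fall linearly from 90 degrees at the image centre (rho = 0) to
# 0 degrees at the image rim (rho = 1).
CAMERA_HEIGHT = 0.4  # metres, assumed


def distance_from_radius(rho: float) -> float:
    """Floor distance (m) for a normalised image radius rho in (0, 1)."""
    alpha = (math.pi / 2) * (1.0 - rho)
    return CAMERA_HEIGHT / math.tan(alpha)


def radius_from_distance(d: float) -> float:
    """Inverse of distance_from_radius, for d > 0."""
    alpha = math.atan(CAMERA_HEIGHT / d)
    return 1.0 - alpha / (math.pi / 2)


def one_pixel_error(d: float, image_height: int) -> float:
    """Distance error (m) when the radius is measured one pixel too far out.

    Only valid while the shifted radius stays inside the rim (rho < 1).
    """
    r_max = image_height / 2  # assumed: the mirror image just fills the image height
    rho = radius_from_distance(d)
    return abs(distance_from_radius(rho + 1.0 / r_max) - d)


if __name__ == "__main__":
    for height in (240, 768, 1200):
        errors = ", ".join(
            f"{d:.0f} m: {one_pixel_error(d, height):.3f} m" for d in (1.0, 2.0, 4.0)
        )
        print(f"{height} px high -> {errors}")
```

With this toy model the one-pixel error grows quickly with distance, because far-away floor points are squeezed into a few pixels near the rim, and it shrinks as the image height goes up.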

The computer isn't fast enough to handle 1600x1200 pixels, so I've decided to run it at 1024x768, which it can handle. Yesterday I also implemented error propagation, but it doesn't seem to be very helpful.
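Assuming "error propagation" here means propagating the pixel uncertainty of a measurement into an uncertainty on the distance, a first-order (linearised) version can be sketched as below. The function name and the quadratic `toy_distance` are hypothetical stand-ins, not the project's actual code or formula.

```python
from typing import Callable


def propagate_pixel_error(distance_fn: Callable[[float], float],
                          r: float, sigma_r: float, step: float = 1e-3) -> float:
    """First-order error propagation: sigma_d ~= |dd/dr| * sigma_r.

    distance_fn maps an image radius in pixels to a distance in metres; its
    derivative at r is estimated with a central finite difference of `step` pixels.
    """
    derivative = (distance_fn(r + step) - distance_fn(r - step)) / (2 * step)
    return abs(derivative) * sigma_r


def toy_distance(r: float) -> float:
    """Hypothetical radius-to-distance mapping, for illustration only."""
    return 0.002 * r * r


if __name__ == "__main__":
    for r in (50.0, 100.0, 150.0):
        sigma_d = propagate_pixel_error(toy_distance, r, sigma_r=1.0)
        print(f"r = {r:.0f} px  d = {toy_distance(r):.2f} m  sigma_d = {sigma_d:.3f} m")
```

The linearisation is only trustworthy where the distance function is close to linear over about plus or minus one `sigma_r`; where it curves sharply, the estimate can be far off.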

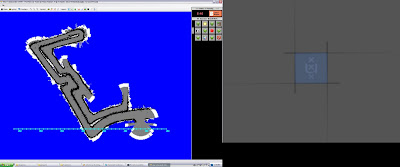

I've been experimenting with the distance formula and found that it works accurately up to 4 meters with the omnicam I am using. Therefore the range of the omnicam will be 4 meters. Arnoud told me that will be OK, because the average laser sensor also has a 4 meter range. I've tested the omnicam at a 1024x768 resolution with a 4 meter range, drawing only the point correspondences; the result is the picture above.

The robot started in the bottom-right corner and I decided to head south first. You can see that it works pretty well, but near the end it broke down: it lost its self-localization. This must be because at that time I was driving through pretty narrow passages. I have to set a good minimum range to avoid this problem.
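Applying both a minimum and a maximum range could look roughly like the sketch below, which keeps only (bearing, distance) readings inside the allowed band and converts them to points in the robot frame. The 4 m maximum comes from the experiments above; the 0.3 m minimum, the reading format and the function name are hypothetical placeholders.

```python
import math
from typing import List, Tuple

MAX_RANGE = 4.0  # metres, from the experiments above
MIN_RANGE = 0.3  # metres, hypothetical placeholder; the right value is still open


def gate_readings(readings: List[Tuple[float, float]],
                  min_range: float = MIN_RANGE,
                  max_range: float = MAX_RANGE) -> List[Tuple[float, float]]:
    """Keep (bearing_rad, distance_m) readings with min_range <= distance <= max_range
    and convert them to (x, y) points in the robot frame (x pointing forward)."""
    points = []
    for bearing, distance in readings:
        if min_range <= distance <= max_range:
            points.append((distance * math.cos(bearing), distance * math.sin(bearing)))
    return points


if __name__ == "__main__":
    scan = [(0.0, 0.1), (0.0, 2.0), (math.pi / 2, 5.0), (math.pi, 4.0)]
    print(gate_readings(scan))  # the 0.1 m and 5.0 m readings are dropped
```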

I've cleaned up the code; it is at revision 1881 at the moment.

I will now start to set up my experiments. Here is a list of what I can test:

1) Evaluate the measured distances of the omnicam

1.1) Laser scanner measurements will be used as ground truth

1.2) Report how far off the omnicam measurements were, for example as a percentage error, or at least with some statistical measure

2) Evaluate a created map of an omnicam against a created map of a laser

2.1) Use the real map to evaluate the created maps by hand (or automatically, if a tool for that exists)

2.2) Find the differences between the maps of the two sensors

Optional 2.3) Use another test environment

3) Show the advantages of the omnicam compared to a laser

3.1) Find situations where the omnicam is better (for example, holes in the floor)

Optional 3.2) Test the hypothesis in USARSim

Optional 3.3) Evaluate how well the omnicam deals with the situation

Optional 4) Let a real robot with an omnicam drive around at Science Park
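For items 1.2 and 2.2, the comparison could be sketched as below. The choice of metrics (bias, mean absolute error, RMSE, mean percentage error, standard deviation) is an assumption, and so is the map format: both maps are taken to be aligned occupancy grids of equal size where 0 means free, 1 occupied and -1 unknown. Function names are hypothetical.

```python
import math
import statistics
from typing import Dict, List, Sequence


def distance_error_stats(omnicam: Sequence[float], laser: Sequence[float]) -> Dict[str, float]:
    """Compare paired omnicam and laser distances (metres, laser = ground truth, > 0)."""
    if len(omnicam) != len(laser) or not omnicam:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [o - l for o, l in zip(omnicam, laser)]
    return {
        "mean_error": statistics.mean(errors),  # signed bias
        "mean_abs_error": statistics.mean(abs(e) for e in errors),
        "rmse": math.sqrt(statistics.mean(e * e for e in errors)),
        "mean_pct_error": statistics.mean(100.0 * abs(e) / l for e, l in zip(errors, laser)),
        "std_error": statistics.pstdev(errors),
    }


def map_agreement(grid_a: List[List[int]], grid_b: List[List[int]]) -> float:
    """Fraction of cells known in both grids (0 free, 1 occupied, -1 unknown)
    on which the two grids agree; NaN if no cell is known in both."""
    known = agree = 0
    for row_a, row_b in zip(grid_a, grid_b):
        for a, b in zip(row_a, row_b):
            if a != -1 and b != -1:
                known += 1
                agree += int(a == b)
    return agree / known if known else float("nan")


if __name__ == "__main__":
    print(distance_error_stats([1.1, 2.0, 2.9], [1.0, 2.0, 3.0]))
    print(map_agreement([[0, 1], [-1, 1]], [[0, 0], [1, 1]]))
```

Percentage errors divide by the laser distance, so laser readings of zero (or "no return" values) would have to be filtered out before calling `distance_error_stats`.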

To test the robot in different environments, I will need to know how to create new color histograms, which I hope to find out tomorrow.
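This post doesn't say how the existing color histograms are built. Assuming they are normalised hue/saturation histograms learned from sample pixels of a surface (for example the floor) and then used to score new pixels by lookup, creating a new one could be sketched as follows. The bin count and function names are hypothetical; only Python's standard `colorsys` module is used.

```python
import colorsys
from typing import Iterable, List, Tuple

BINS = 16  # hypothetical number of bins per channel
RGB = Tuple[int, int, int]


def _bin(value: float, bins: int = BINS) -> int:
    """Map a value in [0, 1] to a bin index in [0, bins - 1]."""
    return min(int(value * bins), bins - 1)


def _hs(pixel: RGB) -> Tuple[float, float]:
    """Hue and saturation (both in [0, 1]) of an 8-bit RGB pixel."""
    r, g, b = pixel
    h, s, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h, s


def build_histogram(pixels: Iterable[RGB], bins: int = BINS) -> List[List[float]]:
    """Normalised 2-D hue/saturation histogram from sample RGB pixels."""
    hist = [[0.0] * bins for _ in range(bins)]
    count = 0
    for pixel in pixels:
        h, s = _hs(pixel)
        hist[_bin(h, bins)][_bin(s, bins)] += 1
        count += 1
    if count:
        hist = [[c / count for c in row] for row in hist]
    return hist


def likelihood(hist: List[List[float]], pixel: RGB) -> float:
    """Histogram value for the pixel's hue/saturation bin (higher = more similar)."""
    bins = len(hist)
    h, s = _hs(pixel)
    return hist[_bin(h, bins)][_bin(s, bins)]


if __name__ == "__main__":
    floor_samples = [(120, 100, 80)] * 10 + [(125, 105, 82)] * 10
    floor_hist = build_histogram(floor_samples)
    print(likelihood(floor_hist, (122, 102, 81)), likelihood(floor_hist, (0, 0, 255)))
```

Ignoring the value (brightness) channel is a common choice to make the histogram less sensitive to lighting, but whether that suits the new environments would need to be checked.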